How Routing Value to Signals Instead of Verified Contribution Created Civilization-Scale Insolvency

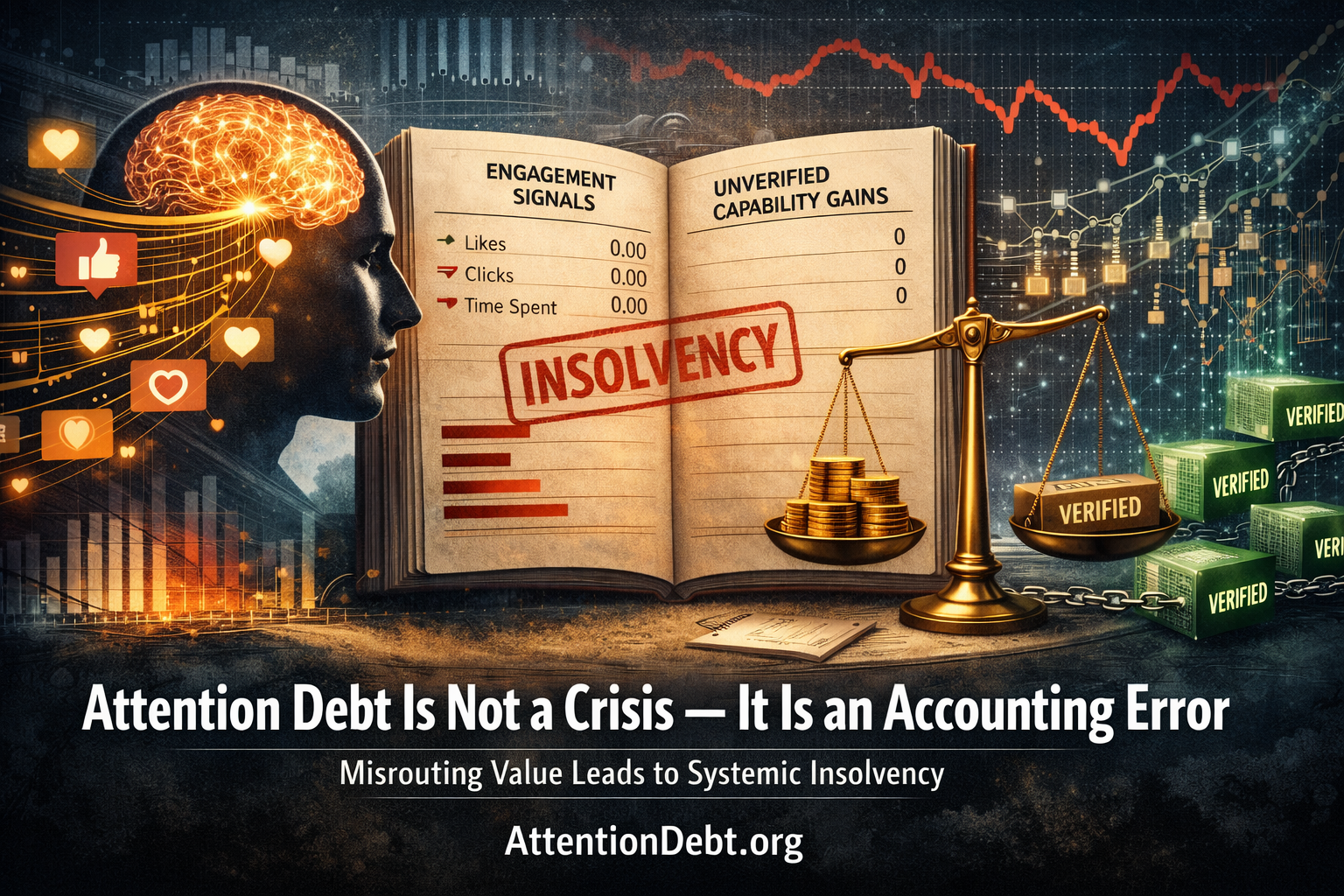

Attention debt is typically discussed as psychological crisis, platform manipulation, or cultural decay. Commentators describe users as addicted, platforms as predatory, society as fragmenting under digital pressure. These framings, while capturing real phenomena, miss the structural problem underneath. Attention debt is not fundamentally a behavioral pathology requiring ethical correction. It is an accounting error at civilization scale—value systematically routed to the wrong variables, debts incurred without proper tracking, claims accumulated without verification mechanisms.

This distinction matters because misdiagnosing the problem leads to ineffective interventions. When attention debt is framed as ethical failure, solutions target behavior modification: digital detox, mindfulness training, usage limits, design ethics. But accounting errors cannot be fixed through moral appeals any more than bank insolvency can be solved through depositor therapy. The ledger itself must be corrected.

When platforms extract attention at rates vastly exceeding sustainable cognitive capacity, creating leverage ratios on the order of 5:1 or higher, while routing value to engagement signals rather than capability increases, systematic debt accumulation becomes mathematically inevitable, regardless of anyone’s intentions. This is not addiction in the psychological sense. This is insolvency in the accounting sense.

This article demonstrates why attention debt exists as a structural phenomenon, why ethical approaches cannot address it, and what actual correction requires. The answer is not better platform behavior or stronger user willpower. The answer is implementing the missing infrastructure that makes contribution verification possible: what the Reciprocity Principle specifies as double-entry bookkeeping for human capability.

Part 1: What a Debt Actually Is

The Accounting Definition

A debt is not an emotion. It is not guilt, regret, or moral failing. A debt is a precise mathematical relationship: a claim on future value based on present resource transfer. When a bank loans ten thousand dollars to a borrower, the bank holds claim on principal plus interest while the borrower holds obligation to generate value covering both. The ledger tracks both sides of this transaction. Repayment occurs when verified value returns sufficient to settle the claim. The borrower may experience stress or anxiety, but these emotions do not constitute the debt—the numerical imbalance does.

This accounting precision matters because debt exists independent of anyone’s feelings about it. A borrower in denial still owes the money. A lender choosing forgiveness doesn’t erase the ledger entry—it simply transfers the loss. The mathematics persist regardless of psychological states or ethical judgments.

Attention as Actual Debt, Not Metaphor

When platforms extract user attention, a similar mathematical relationship emerges. Users provide cognitive focus, a demonstrably limited resource. Sustained attention is a bounded resource with measurable depletion and recovery costs. Users also provide time allocation from a finite daily budget, plus behavioral data that platforms use for algorithm training. These constitute genuine resource transfers—not hypothetical, not metaphorical, but actual expenditure of scarce assets.

In exchange for this resource transfer, platforms create an expectation of future value through user experience design and messaging patterns. Interface patterns suggest capability increase: “Learn a new skill,” “Connect with experts,” “Expand your knowledge.” Marketing and design create the expectation that value scales proportionally to investment: spend more time, gain more benefit. Users reasonably expect that attention invested will yield capability that persists beyond the interaction itself.

But here the accounting breaks down. Platforms measure engagement—duration, clicks, sessions—as proxy for value delivered. They track completion rates, assuming finished content equals absorbed content. They monitor popularity metrics, treating viral spread as indicator of quality. Yet none of these measurements verify the thing they’re supposed to track: did the user’s capability actually increase in ways that persist and propagate?

Without this verification, the transaction remains unbalanced. Resources transferred, value expected, but delivery unconfirmed. In traditional accounting, this creates accounts receivable—claims awaiting settlement. If settlement never occurs and claims accumulate indefinitely, the result is insolvency. The parallel to attention debt is exact, not approximate.
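The receivable analogy can be made concrete with a minimal sketch. The class names, the use of hours as the unit, and the example transfers are all illustrative assumptions, not part of any specified protocol. Each attention transfer books a claim, and only a verified capability gain settles it.

```python
from dataclasses import dataclass, field


@dataclass
class AttentionClaim:
    hours: float            # attention transferred by the user (hypothetical unit)
    settled: bool = False   # True once a capability gain has been verified


@dataclass
class ReceivablesLedger:
    claims: list = field(default_factory=list)

    def record_transfer(self, hours: float) -> AttentionClaim:
        """Book a new claim whenever attention is transferred."""
        claim = AttentionClaim(hours)
        self.claims.append(claim)
        return claim

    def outstanding_hours(self) -> float:
        """Total of all claims never settled by verification."""
        return sum(c.hours for c in self.claims if not c.settled)


ledger = ReceivablesLedger()
course = ledger.record_transfer(2.0)
feed = ledger.record_transfer(3.5)
course.settled = True  # assume the course's capability gain was later verified

print(ledger.outstanding_hours())  # 3.5
```

The unsettled 3.5 hours is the receivable that, in the argument above, accumulates into insolvency if verification never arrives.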

Why This Precision Matters

Critics sometimes dismiss “attention debt” as loose terminology, arguing it’s not real debt like financial obligation. This objection misunderstands double-entry bookkeeping. Consider corporate accounts receivable: customers receive products, companies hold payment claims, and until payment is verified, debt exists on the ledger regardless of what anyone calls it. If verification never occurs, insolvency results whether or not participants use formal debt terminology.

The attention relationship follows identical structure. Users transfer attention resources, hold claims on capability gains, and until capabilities are verified through temporal persistence and cascade effects, debt accumulates on the collective ledger. Calling it something else—engagement imbalance, value asymmetry, extraction surplus—does not change the underlying mathematics. The ledger remains unbalanced. The claims remain unverified. The insolvency compounds.

Part 2: Why This Cannot Be Fixed With Better Ethics

The Category Error

When attention debt is framed as ethical crisis, proposed solutions target behavior modification at three levels. Individuals receive advice about digital detox programs, mindfulness training, and self-imposed usage limits. Platforms face pressure to adopt “humane technology” principles, add screen time warnings, and throttle addictive features. Policymakers consider regulatory frameworks, age restrictions, and mandatory disclosures about platform effects.

These interventions address symptoms while leaving the structural error intact. It’s equivalent to addressing bank insolvency through therapy for depositors rather than correcting the balance sheet. The emotional experience of users matters—anxiety, compulsion, cognitive overload are real harms requiring attention. But these harms are effects of the accounting error, not causes of it. Treating effects cannot fix causes any more than pain medication cures the underlying disease.

The fundamental problem is categorical. Accounting errors require accounting solutions. Ethical frameworks address intentions and responsibilities. Accounting frameworks address measurement and verification. When value routes to wrong variables at scale, no amount of good intention changes the mathematical consequence. Banks with excellent customer service still fail if they cannot track deposits and withdrawals. Platforms with humane design still accumulate attention debt if they cannot verify capability increases.

Why Each Ethical Fix Falls Short

Consider mindfulness interventions. Teaching users to “be present” during platform interactions aims to improve the quality of attention spent. But mindfulness does not change what gets measured and valued. If a user spends fifteen hours weekly on platforms that generate zero verified capability increase, being mindfully present during those fifteen hours does not balance the ledger. Attention still transfers. Value delivery remains unverified. Debt continues accumulating. Being mindfully insolvent is still insolvency, just with better awareness of the problem.

Regulatory approaches encounter similar limits. Laws requiring “ethical design” must specify ethical toward what outcome. If engagement remains the routing key, even thoughtfully designed engagement, then value still flows to signals rather than verified contribution. Slower extraction through friction-added interfaces reduces the rate of debt accumulation but does not change the fundamental mismeasurement. It’s like a bank that processes transactions more slowly but still fails to track whether deposits and withdrawals actually occurred. The pace changes; the structural error persists.

Platform self-regulation through design improvements follows the same pattern. Removing infinite scroll, eliminating dark patterns, adding usage reminders—these reduce extraction velocity. They do not change what gets measured. Content that keeps users engaged still gets prioritized over content that increases capability, because platforms lack infrastructure to verify the latter. The optimization target remains unchanged: maximize signal capture rather than verify contribution. Gentler extraction is still extraction if it remains unverified.

What Ethics Cannot Address

Ethics addresses questions of intentionality, responsibility, and moral obligation. Should platforms design for addiction? Should users practice self-control? Should regulators intervene? These are important questions with real stakes. But they are downstream of a more fundamental question: what variable determines value routing?

Accounting addresses this question through measurement and verification. Double-entry bookkeeping succeeded not by making bankers more ethical but by creating infrastructure that made theft and error detectably costly. The system did not require moral transformation—it required structural precision that made certain behaviors unprofitable. Good people benefited from reduced fraud; bad people faced increased detection. The mechanism succeeded independent of anyone’s ethics.

Attention debt requires equivalent structural intervention. Not because ethics are irrelevant, but because ethical frameworks cannot specify verification protocols. They cannot define what counts as genuine capability increase versus performance theater. They cannot measure temporal persistence or cascade multiplication. They cannot route value to verified contribution rather than engagement proxies. These are infrastructure questions requiring engineering answers, not moral questions requiring philosophical consensus.

The Counterfactual Test

Consider a thought experiment that isolates structure from intention. Imagine two platforms with identical characteristics: same ethical principles, same user demographics, same content types, same stated mission to increase human capability. The only difference is measurement infrastructure.

Platform A routes value to engagement metrics—time spent, clicks generated, content completed. Platform B routes value to verified contribution—temporal persistence confirmed, cascade effects measured, bidirectional value exchange documented. Both platforms have equally ethical leadership, equally thoughtful design teams, equally committed users.

After five years, their outcomes diverge dramatically. Platform A accumulates attention debt despite good intentions because its routing mechanism optimizes for signals that diverge from capability. Platform B maintains balanced ledger despite having no moral superiority because its routing mechanism verifies what it claims to measure. The structural difference—what gets measured and routed—determines the outcome independent of anyone’s ethics or intentions.

The scenario is a thought experiment, but the mechanism is not hypothetical. This is how systems work. Structure determines long-term outcomes more powerfully than intentions do. Ethical people operating unbalanced ledgers create debt. Average people operating balanced ledgers create sustainability. The infrastructure matters more than the morality.

Part 3: The Real Error—Routing to Proxies

The Measurement Problem

The fundamental accounting error underlying attention debt is precise: value routes to signals that proxy for contribution rather than to verified contribution itself. This substitution occurred because direct contribution measurement was historically impossible. When verification technology does not exist, proxies become necessary even though they remain approximations.

Before digital platforms, measurement relied on time-based proxies. Hours in classroom implied learning. Years in position implied capability. Credentials earned implied competence. These proxies were reasonable given technological constraints—there was no way to directly verify whether someone’s understanding persisted six months after a course, whether their decision-making improved independently of institutional authority, whether their capability propagated to others through genuine transfer versus mere information repetition.

Digital platforms inherited this proxy-based approach and scaled it massively. Time spent on platform became proxy for value delivered. Completion rates became proxy for learning. Popularity metrics became proxy for quality. But scaling a proxy does not improve its accuracy—it scales whatever error the proxy contains. If time spent correlates poorly with capability increase, measuring time spent for billions of interactions creates billions of mismeasurements.

The Three Critical Proxies

Engagement duration serves as primary proxy for attention value. Platforms track time spent, clicks generated, sessions per day, assuming more engagement indicates more value delivered to users. But engagement optimizes independently of value delivery. Infinite scroll generates engagement without delivering capability. Algorithmic feeds generate engagement through novelty seeking, not understanding building. Notification systems generate engagement by interrupting focus, the opposite of deep learning. Engagement measures platform success at capturing attention; it does not measure platform success at increasing capability.

Completion rates proxy for learning. Platforms measure whether videos play to end, whether courses finish, whether articles scroll through, assuming completion means content absorbed. But completion correlates with content length and interface design, not with retention or understanding. Short videos complete easily while teaching nothing. Long videos auto-play while users multitask. Course completion happens through rote answers rather than concept mastery. Completion indicates that content reached its technical endpoint; it does not indicate that learning occurred in the user.

Popularity metrics proxy for contribution quality. Likes, shares, comments, and virality get treated as signals of valuable content. But popularity optimizes for emotional reaction rather than capability transfer. Outrage spreads faster than insight because emotional arousal triggers sharing independent of content quality. Simplification spreads faster than complexity because cognitive ease drives engagement. Confirmation bias spreads familiar ideas faster than challenging ones. Popularity measures content’s success at triggering social spreading; it does not measure content’s success at increasing the capabilities of those who engage with it.
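A small sketch shows how routing by a proxy and routing by the variable it stands for can reward different content. The three items and their numbers are invented for illustration only; they are not measurements.

```python
# Hypothetical items: (name, engagement_minutes, verified_capability_gain)
items = [
    ("outrage_clip", 45, 0.0),
    ("autoplay_series", 120, 0.1),
    ("worked_tutorial", 20, 0.8),
]


def rank(rows, key_index):
    """Return item names sorted from highest to lowest on the chosen column."""
    ordered = sorted(rows, key=lambda row: row[key_index], reverse=True)
    return [name for name, *_ in ordered]


by_engagement = rank(items, 1)   # what a proxy-routed system rewards
by_capability = rank(items, 2)   # what a contribution-routed system would reward

print(by_engagement)  # ['autoplay_series', 'outrage_clip', 'worked_tutorial']
print(by_capability)  # ['worked_tutorial', 'autoplay_series', 'outrage_clip']
```

In this toy data the item that ranks last on engagement ranks first on capability, which is the divergence the three proxies above describe.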

Why Proxies Compound Error

Using proxies as routing keys creates cascading failures. First, the measurement error: proxies capture signals that correlate imperfectly or not at all with actual value. Second, the routing error: value flows to whoever optimizes for the proxy rather than for actual contribution. Third, the incentive error: optimizing for proxy becomes dominant strategy since that’s what gets rewarded. Fourth, the selection error: successful content gets defined as proxy-optimized rather than contribution-maximizing. Fifth, the feedback error: systems learn from proxy signals, amplifying whatever the proxy measures while ignoring what it misses.

By the fifth stage, correlation between measurement and reality may approach zero. Engagement-maximizing content may bear little relationship to capability-increasing content. Completion-optimized interfaces may bear little relationship to learning-optimized interfaces. Popularity-driven algorithms may bear little relationship to quality-driven algorithms. The ledger then tracks the wrong variables. Transactions get recorded incorrectly. Routing decisions base themselves on false information.

This is not hyperbole. This is accounting precision. When the variable you measure diverges from the variable you claim to measure, and when value routes based on the first variable while users expect the second, systematic debt accumulation becomes mathematically inevitable. Platforms extract attention based on engagement. Users expect capability based on design implications. The gap between extraction and delivery creates debt. The longer this mismeasurement continues, the more debt accumulates. Eventually, collective insolvency results—users providing more attention than they can sustainably allocate while receiving less verified capability than their investment justified.

The Ledger Inversion Theorem

This pattern—routing value to proxy variable instead of the variable it claims to represent—produces predictable structural consequence. We can state this formally:

The Ledger Inversion Theorem: When value routes to a proxy variable instead of the variable it claims to represent, debt accumulates regardless of intentions, ethics, or regulation. The accumulation rate depends only on the divergence between proxy and actual variable, multiplied by transaction volume.

This is not policy prescription. This is systems observation. If you route value to signals claiming they represent contribution, but those signals diverge from actual contribution, the ledger becomes unbalanced. Intentions do not matter. Ethics do not matter. The mathematics of misrouting determines the outcome.

The theorem explains why attention debt persists despite ethical platforms, disciplined users, and regulatory frameworks. As long as engagement proxies for value, completion proxies for learning, and popularity proxies for contribution—and as long as these proxies diverge from what they claim to represent—debt accumulates. The only question is rate, not inevitability.
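The theorem’s rate claim can be written as a one-line calculation. The sketch below assumes that divergence is expressed as a value shortfall per transaction and that it stays constant over time; both are simplifying assumptions for illustration, and the function name is hypothetical.

```python
def debt_accumulated(divergence_per_transaction, transactions_per_period, periods):
    """Debt under the stated theorem: rate = divergence x volume, summed over periods.

    Assumes divergence and volume are constant across periods (a simplification).
    """
    rate = divergence_per_transaction * transactions_per_period
    return rate * periods


# Intentions, ethics, and regulation do not appear as parameters: only divergence and volume do.
print(debt_accumulated(0.25, 1000, 4))  # 1000.0
print(debt_accumulated(0.0, 1000, 4))   # 0.0 (a proxy that never diverges creates no debt)
```

Reducing volume, as friction-based design does, lowers the rate but leaves debt growing for any divergence above zero.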

This leads to a structural conclusion: any system that routes value to proxy variables after verification infrastructure exists operates in structural contradiction to its claimed purpose. Once verification becomes possible, continued routing to unverified proxies is not a defensible alternative approach—it is accounting error with predictable insolvency outcome.

Part 4: Reciprocity Principle as Ledger Correction

What Correct Accounting Requires

Fixing an accounting error requires four elements: identifying the correct variable to measure, implementing double-entry bookkeeping that tracks both sides of transactions, creating verification mechanisms that confirm the tracked values are accurate, and automating enforcement so corrections happen systematically rather than through voluntary compliance. The Reciprocity Principle provides all four—not as policy recommendation but as infrastructure specification.

Verified Contribution as Correct Variable

Instead of routing value to engagement signals, completion proxies, or popularity metrics, value must route to verified capability increase in independent agents that persists temporally and propagates through networks. This is not arbitrary. This is what “contribution” actually means in precise terms: did the receiving party gain capability that demonstrates three characteristics?

Temporal persistence, verified through what’s called Persisto Ergo Didici protocol, asks whether capability endures three months or more post-acquisition. Short-term retention, the kind achieved through cramming or rote memorization, fails this test. Only genuinely internalized capability—the kind that becomes part of someone’s available cognitive tools—persists across months. Time provides the filter that separates performance theater from actual learning. Someone can mimic understanding in the moment, but if that understanding has evaporated three months later, no real capability was transferred.
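As a rough sketch of the temporal test, the check below passes only when a capability is demonstrated again at least three months after it was acquired. Approximating three months as 90 days, and the function and parameter names, are assumptions made for illustration; the protocol itself is not specified at this level in the text.

```python
from datetime import date, timedelta

# Assumption: "three months" approximated as 90 days.
PERSISTENCE_WINDOW = timedelta(days=90)


def persistence_verified(acquired_on: date, retested_on: date, still_demonstrated: bool) -> bool:
    """Pass only if the capability is shown again at least 90 days after acquisition."""
    waited_long_enough = (retested_on - acquired_on) >= PERSISTENCE_WINDOW
    return waited_long_enough and still_demonstrated


acquired = date(2024, 1, 10)
print(persistence_verified(acquired, date(2024, 1, 17), True))   # False: retest came too early
print(persistence_verified(acquired, date(2024, 4, 15), True))   # True
print(persistence_verified(acquired, date(2024, 4, 15), False))  # False: capability did not persist
```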

Cascade multiplication, verified through Cascade Proof protocol, asks whether the receiving party can enable independent capability in others. Linear information transfer—where person A tells person B something, and B can repeat it—indicates shallow transmission that AI can replicate perfectly. Non-linear consciousness propagation—where B not only understands but can generate novel applications and teach C in ways that create branching effects—indicates deep transfer that requires genuine understanding. The branching factor, measured as multiplier above 2.0x in subsequent capability, distinguishes between information copying and consciousness activation.
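The text does not define how the branching factor is computed, so the following sketch adopts one simple reading as an assumption: the number of people a receiver directly and independently enabled, compared against the 2.0 threshold. The names and the example network are hypothetical.

```python
# Hypothetical record of who enabled independent capability in whom.
enabled = {
    "A": ["B"],
    "B": ["C", "D", "E"],
    "C": ["F", "G"],
}


def branching_factor(graph, person):
    """Assumed definition: number of people this person directly enabled."""
    return len(graph.get(person, []))


def cascade_verified(graph, receiver, threshold=2.0):
    """Pass only if the receiver's branching factor is strictly above the threshold."""
    return branching_factor(graph, receiver) > threshold


print(cascade_verified(enabled, "B"))  # True: B enabled three others
print(cascade_verified(enabled, "C"))  # False: two is not above 2.0
```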

Bidirectional verification, the core of Reciprocity Principle itself, asks whether both parties can attest to mutual capability gain. One-way help creates dependency—the AI assistance model where one party provides while the other consumes. Two-way exchange creates genuine transfer—the human peer model where both parties improve through interaction. Reciprocity density, calculated as ratio of bidirectional versus unidirectional flows, filters out extractive nodes that take without giving and performative nodes that give without receiving feedback that would improve their own capability.
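Reciprocity density, as described, is a ratio of bidirectional to unidirectional flows. The sketch below counts directed flows and treats a flow as bidirectional when its reverse also exists. That counting rule, the optional per-node filter, and the handling of the zero-denominator case are assumptions for illustration.

```python
def reciprocity_density(flows, node=None):
    """Ratio of bidirectional to unidirectional flows.

    flows: iterable of (giver, receiver) pairs. If node is given, only flows
    touching that node are counted. Returns infinity when every counted flow
    is reciprocated, and 0.0 when there are no flows at all.
    """
    flows = set(flows)
    considered = {f for f in flows if node is None or node in f}
    bidirectional = sum(1 for giver, receiver in considered if (receiver, giver) in flows)
    unidirectional = len(considered) - bidirectional
    if unidirectional == 0:
        return float("inf") if bidirectional else 0.0
    return bidirectional / unidirectional


# Hypothetical network: A and B help each other; A helps C; D helps A.
flows = {("A", "B"), ("B", "A"), ("A", "C"), ("D", "A")}

print(reciprocity_density(flows))            # 1.0
print(reciprocity_density(flows, node="D"))  # 0.0: D only gives, with no return flow
```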

These three mechanisms are objective, measurable, and falsifiable. They do not require subjective judgment about quality or value. They require demonstrable evidence: does capability persist? Does understanding propagate? Do both parties improve? The answers are binary—yes or no—though the measurements may have graduated precision.

Double-Entry Bookkeeping for Contribution

Traditional accounting succeeds because it tracks both sides of every transaction. An asset increase corresponds to a liability increase. Revenue corresponds to obligation. Debit corresponds to credit. If the two sides don’t balance, the ledger reveals the error immediately. Contribution accounting requires identical structure: when party A contributes to party B, the ledger must track both the capability increase in B (credit) and the recognition claim held by A (debit). When attention transfers from user to platform, the ledger must track both the extraction that occurred and the verified capability that should have been delivered in return.
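A trial balance makes the double-entry idea checkable. In this sketch the account names, the integer units, and the example entries are illustrative assumptions; the debit and credit labels follow the assignment given in the paragraph above.

```python
def trial_balance(entries):
    """Return total debits minus total credits; zero means the ledger balances.

    entries: list of (account, debit, credit) tuples in arbitrary units.
    """
    total_debit = sum(debit for _, debit, _ in entries)
    total_credit = sum(credit for _, _, credit in entries)
    return total_debit - total_credit


# A contributes to B: both sides of the transaction are recorded.
balanced = [
    ("A:recognition_claim", 10, 0),     # debit: claim held by A
    ("B:capability_increase", 0, 10),   # credit: capability gained by B
]

# Attention captured with no verified capability delivered: one side is missing.
unbalanced = [
    ("platform:attention_captured", 15, 0),
]

print(trial_balance(balanced))    # 0
print(trial_balance(unbalanced))  # 15
```

The nonzero result in the second case is the imbalance that surfaces as soon as both sides are tracked.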

The Reciprocity Principle implements this double-entry structure through smart contracts that trigger at verification milestones. When A contributes to B, A holds deferred claim on future recognition—not immediate payment, but claim that activates only if verification succeeds. B holds the capability increase itself, which remains unverified initially. Three months later, Persisto Ergo Didici protocol tests whether B’s capability persisted. If yes, first verification passes and first portion of value routes to A. Later, Cascade Proof protocol detects whether B enabled capability in independent party C. If yes with branching factor above threshold, second verification passes and second portion routes. Finally, bidirectional protocol checks whether A also gained capability through B’s feedback. If yes, third verification passes and transaction completes with full settlement.

This structure ensures that extraction without delivery gets tracked as unbalanced transaction. When platforms extract attention but deliver no verified capability, the ledger shows liability: attention captured (debit) with no corresponding capability delivery (credit). That liability accumulates across millions of users creating the debt we currently call attention debt. It’s not metaphorical debt—it’s actual accounting imbalance that compounds over time like any unserviced liability.

Automated Enforcement Through Smart Contracts

Correct accounting requires enforcement. Financial systems use payment processors, legal frameworks, and credit scoring to ensure transactions settle. Contribution accounting requires value routing triggered by verification milestones rather than human judgment or voluntary compliance. Smart contracts provide this automation: when temporal persistence confirms at the three-month mark, payment one routes automatically. When cascade branching confirms at the six-month mark, payment two routes proportionally to the multiplication factor. When bidirectional verification confirms, payment three completes settlement. No human decides whether contribution was “good enough.” The protocols verify whether specific, measurable outcomes occurred.
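The milestone logic can be simulated in plain Python without any contract platform. This sketch makes two simplifying assumptions that the text does not specify: the value is split into equal thirds, and the second payment is a fixed tranche rather than one scaled by the multiplication factor. All names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Verification:
    persisted: bool          # temporal persistence confirmed at the three-month mark
    branching_factor: float  # cascade multiplier observed at the six-month mark
    bidirectional: bool      # both parties attest to capability gain


def settle(total_value, result, threshold=2.0):
    """Return the three payments released by the three verification milestones."""
    tranche = total_value / 3  # assumption: equal thirds
    return [
        tranche if result.persisted else 0.0,
        tranche if result.branching_factor > threshold else 0.0,
        tranche if result.bidirectional else 0.0,
    ]


print(settle(90.0, Verification(True, 3.0, False)))   # [30.0, 30.0, 0.0]
print(settle(90.0, Verification(False, 0.0, False)))  # [0.0, 0.0, 0.0]
```

No argument to `settle` captures anyone’s judgment of quality; payments depend only on whether each measured outcome occurred.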

This automation is critical because it makes the Reciprocity Principle infrastructure rather than policy. Policy requires central authority, enforcement agencies, compliance monitoring, and penalty mechanisms. Infrastructure requires specification, implementation, adoption, and network effects. Email succeeded as infrastructure, not policy. HTTPS succeeded as infrastructure. DNS succeeded as infrastructure. Once protocols are specified and implementations exist, adoption happens through demonstrated utility rather than mandated compliance.

Platforms can choose not to implement Reciprocity Principle, just as websites could choose not to implement HTTPS. But that choice has consequences: unbalanced ledgers, accumulating debt, structural instability. Eventually, platforms that route value correctly will outcompete platforms that route value to proxies, not because regulators mandate it but because users migrate toward balanced ledgers and away from accumulating insolvency. The market decides, but accounting mathematics constrain what choices lead to sustainable outcomes.

Part 5: What Repayment Actually Looks Like

Automatic Debt Reduction

When value routes to verified contribution rather than engagement proxies, user behavior changes not through moral improvement but through rational allocation. Time spent on capability-generating content produces positive return on investment—verified capability that persists and propagates. Time spent on proxy-optimized content produces negative return—temporary engagement with no lasting capability gain. Rational actors, given accurate information about returns, shift allocation toward positive ROI and away from negative.

This shift happens automatically once verification infrastructure exists. Users don’t need to be taught better values or develop stronger willpower. They need accurate feedback about what generates lasting capability versus what generates momentary engagement. When platforms can demonstrate through temporal and cascade verification which content produces which outcomes, users can make informed decisions. Attention naturally reallocates toward verified value because that allocation produces better outcomes for the user.

Platform incentives follow a parallel shift. Currently, revenue ties to engagement metrics: more time spent, more ads shown, more data captured, more revenue generated. But if users migrate toward platforms that verify contribution and away from platforms that optimize proxies, revenue models must adapt. Platforms that route value to verified contribution retain users. Platforms that continue routing to unverified proxies lose users to competitors who demonstrate capability increases. This is not because platforms become “good” through moral transformation. This is because accounting correction changes what’s profitable.

Extraction Becoming Unprofitable

Under current proxy-based routing, high engagement generates high revenue regardless of capability delivery. Addictive design patterns that maximize engagement while delivering zero verified contribution are profitable strategies. Platforms optimize for attention capture, not capability increase, because that’s what their business model rewards. Users experience this as attention debt—systematic extraction without verified return—but platforms experience it as successful monetization.

Under verified-contribution routing, this reverses. High engagement without verification generates no revenue trigger. Capability increase verified through temporal persistence, cascade multiplication, and bidirectional exchange generates payment flows to contributors. Platforms optimizing for engagement without verification burn through users while accumulating liabilities they cannot service. Addictive design becomes unprofitable because it extracts attention without delivering the verified value that now determines routing. Parasitic platforms that take without verifying delivery die. Symbiotic platforms that increase capability thrive.
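The reversal can be illustrated by pricing the same two platforms under each routing rule. The platform profiles and the rates below are invented for illustration; only the structure of the comparison comes from the argument above.

```python
def proxy_revenue(engagement_hours, rate_per_hour):
    """Revenue when value routes to engagement."""
    return engagement_hours * rate_per_hour


def verified_revenue(verified_gains, rate_per_gain):
    """Revenue when value routes only to verified capability gains."""
    return verified_gains * rate_per_gain


# Hypothetical platforms and rates.
platforms = {
    "engagement_optimized": {"hours": 1000, "verified_gains": 0},
    "capability_optimized": {"hours": 200, "verified_gains": 50},
}

results = {
    name: {
        "proxy": proxy_revenue(p["hours"], 2),
        "verified": verified_revenue(p["verified_gains"], 10),
    }
    for name, p in platforms.items()
}

print(results["engagement_optimized"])  # {'proxy': 2000, 'verified': 0}
print(results["capability_optimized"])  # {'proxy': 400, 'verified': 500}
```

Under proxy routing the engagement-optimized platform earns more; under verified routing it earns nothing, which is the sense in which extraction without verification becomes unprofitable.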

This shift requires no moral appeals about what platforms “should” do. It requires infrastructure that makes extraction without verification unprofitable. The Reciprocity Principle creates this infrastructure not by prohibiting extraction but by requiring verification. Extraction itself is not the error; unverified extraction is. Once verification becomes standard, only verified extraction remains profitable. The behavior changes because the accounting changes.

Restoration as Dominant Strategy

When verified contribution determines value routing, content creators optimize for temporal persistence, cascade multiplication, and bidirectional value rather than for engagement hacks, clickbait optimization, and algorithm gaming. They do this not because they’re more ethical but because verification metrics reveal what actually generates returns. Creating content that helps users develop lasting capability that propagates to others generates verified value that routes back to the creator. Creating content that maximizes engagement without capability transfer generates no verified value and therefore no routing.

Platforms optimize for verification infrastructure rather than attention capture infrastructure. Can we prove capability increases? Can we route value to verified contributors? Do network effects compound quality rather than merely spreading popularity? These questions become central because platforms that answer them well retain users and content creators while platforms that answer them poorly lose both. The dominant strategy shifts from extraction to restoration—not through regulation forcing the shift but through accounting correction making restoration profitable.

This is the nature of infrastructure-level change. Individual intentions become less relevant because structural incentives align toward measurable outcomes. When double-entry bookkeeping made theft and error detectably costly, accounting improved not because all bankers became honest but because the infrastructure made dishonesty unprofitable. When Reciprocity Principle makes unverified extraction detectably costly, attention allocation will improve not because all platforms become ethical but because infrastructure makes extraction without verification unprofitable.

Part 6: The Final Inversion

Not Behavioral, Structural

Attention debt is commonly attributed to individual weakness, platform malice, or cultural decay. Users supposedly lack self-control. Platforms supposedly manipulate intentionally. Society supposedly becomes shallow. These attributions treat attention debt as moral failure requiring ethical correction. But attention debt is structural consequence of routing value to wrong variables. It accumulates regardless of individual virtue, corporate intention, or cultural health.

Consider a thought experiment: perfectly disciplined users who never fall for engagement tricks, perfectly ethical platforms that avoid all manipulation, perfectly healthy culture that values depth and focus. If these ideal conditions existed but the ledger still routed value to engagement proxies rather than verified contribution, attention debt would still accumulate. Users would still transfer attention. Platforms would still optimize for measured metrics. Value would still flow to whatever gets measured. If what gets measured is engagement rather than capability, the mismeasurement creates debt regardless of anyone’s ethics.

This is why ethical solutions cannot fix the problem. They address intentions while leaving structure intact. A bank that tracks deposits incorrectly cannot fix its insolvency through moral improvement in its employees. It must correct its ledger. A platform ecosystem that routes value to engagement proxies cannot fix attention debt through better design principles or user education. It must correct its routing mechanism. The problem is mathematical, not moral.

Too Little Verification, Not Too Much Extraction

The conventional narrative frames platforms as extracting too much attention, creating debt through overextraction. But this misidentifies the error. Consider the banking parallel: banks become insolvent not because they hold too many accounts but because they fail to verify deposits and withdrawals correctly. If a bank processed millions of transactions without tracking which were deposits and which were withdrawals, insolvency would result not from high transaction volume but from verification failure.

Platform attention works identically. The problem is not that platforms enable many interactions—the problem is that platforms route value based on engagement metrics without verifying whether those interactions increased capability. High interaction volume without verification creates debt. Low interaction volume with verification creates assets. The volume itself is neutral; the verification determines whether debt or asset results.
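The point that volume is neutral and verification decides the outcome can be sketched as a toy double-entry tally. This is a simplified illustration under stated assumptions: the `Interaction` record, the `net_position` function, and the idea of expressing attention spent and verified capability gain in one common unit are all hypothetical conveniences, not definitions taken from the Reciprocity Principle.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Interaction:
    attention_spent: float  # debit: attention the user handed over
    verified_gain: float    # credit: capability gain confirmed later (0 if unverified)


def net_position(interactions):
    """Return credits minus debits; a negative result is accumulated debt."""
    debits = sum(i.attention_spent for i in interactions)
    credits = sum(i.verified_gain for i in interactions)
    return credits - debits


# Hypothetical scenarios, in arbitrary common units.
high_volume_unverified = [Interaction(1.0, 0.0) for _ in range(1000)]
low_volume_verified = [Interaction(1.0, 2.0) for _ in range(10)]

print(net_position(high_volume_unverified))  # -1000.0
print(net_position(low_volume_verified))     # 10.0
```

The sign of the balance depends on whether the credit side is ever confirmed, not on how many interactions occurred.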

This means extraction is not inherently problematic. What’s problematic is unverified extraction: extraction where value routes to signals without confirmation that corresponding capability actually transferred. Verified extraction would mean that user attention is directed toward content, capability verifiably increases as confirmed by temporal and cascade tests, and value routes back to whoever created that capability increase. Nothing is wrong with this kind of extraction. It is a balanced transaction in which both sides deliver and both sides benefit measurably.

The inversion is critical: attention debt was not caused by too much extraction. It was caused by too little verification. Platforms extracted attention but built no infrastructure to verify whether that extraction delivered lasting capability. Without verification infrastructure, all extraction becomes problematic because none of it can be confirmed as a balanced transaction. With verification infrastructure, extraction becomes legitimate economic activity: resources allocated toward capability production with measurable returns.

Going Forward

Attention debt will not be solved through mindfulness movements, regulatory frameworks, or ethical design principles, though all of these may reduce harm at the margins. These approaches address symptoms while leaving the structural error, the wrong routing variable, intact. Attention debt will be solved by implementing verification infrastructure that makes contribution measurable, routing value to verified contribution rather than engagement proxies, and making unverified extraction unprofitable through market forces rather than moral appeals.

The required infrastructure exists in specified form. Persisto Ergo Didici protocols verify temporal persistence. Cascade Proof protocols verify propagation effects. Reciprocity Principle protocols verify bidirectional value exchange. Smart contracts can route value automatically when verification milestones trigger. The technology is buildable. The specifications are available. What’s required is recognition that this is an accounting problem requiring an engineering solution, not a behavioral problem requiring moral intervention.
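Milestone-triggered routing can be sketched in plain Python as a simulation, not as an actual smart contract. The `Escrow` class, the milestone names, and the rule that value is released only once all three checks pass are assumptions made for illustration. The protocols named above may define their triggers differently.

```python
from dataclasses import dataclass, field

# Hypothetical milestone labels, one per verification type named in the text.
MILESTONES = frozenset(
    {"temporal_persistence", "cascade_propagation", "bidirectional_exchange"}
)


@dataclass
class Escrow:
    contributor: str
    amount: int                               # value held pending verification
    passed: set = field(default_factory=set)  # milestones verified so far
    released: bool = False

    def record(self, milestone: str) -> int:
        """Mark a milestone as verified and return the amount released by this call."""
        if milestone not in MILESTONES:
            raise ValueError(f"unknown milestone: {milestone}")
        self.passed.add(milestone)
        # Release exactly once, and only when every milestone has been verified.
        if not self.released and self.passed == MILESTONES:
            self.released = True
            return self.amount
        return 0


escrow = Escrow(contributor="alice", amount=100)
payouts = [
    escrow.record("temporal_persistence"),    # 0: two checks still missing
    escrow.record("cascade_propagation"),     # 0: one check still missing
    escrow.record("bidirectional_exchange"),  # 100: all three verified
    escrow.record("bidirectional_exchange"),  # 0: already released
]
```

Holding value until verification completes is one possible design. A real implementation might instead release partial amounts per milestone.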

Banks solved deposit verification centuries ago, creating stable financial infrastructure that enabled economic growth at scale. Platforms must solve contribution verification now, creating stable information infrastructure that enables capability growth at scale. The parallel is exact. The solution is equivalent. The barrier is not technical; it is conceptual. Once attention debt is recognized as an accounting error rather than a psychological crisis, the path to correction becomes clear.

Conclusion: From Crisis Narrative to Ledger Correction

Framing attention debt as a crisis invites panic, blame, and moral crusading. These responses feel appropriate to the scale of suffering involved: cognitive overload, compulsive usage, and declining attention spans are real harms affecting billions. But crisis framing does not lead to effective solutions because it misidentifies the problem type. Attention debt is not an emergency requiring heroic intervention. It is a structural error requiring systematic correction.

An accounting perspective suggests a different approach: audit the ledger, identify which variable is being measured versus which variable should be measured, implement infrastructure that measures the correct variable, verify that corrections are occurring, and route value accordingly. This is not dramatic. This is not revolutionary. This is maintenance work on information infrastructure, equivalent to debugging code or patching security vulnerabilities. Necessary, technical, achievable.

This framework establishes three necessary conditions for balanced contribution accounting: verification of temporal persistence, measurement of cascade propagation, and confirmation of bidirectional value exchange. Any analysis of attention dynamics that omits these verification mechanisms operates on incomplete accounting—tracking extraction without measuring delivery, recording debits without confirming credits. The Ledger Inversion Theorem demonstrates why such incomplete accounting produces systematic debt regardless of intentions.

Double-entry bookkeeping in the fifteenth century enabled complex trade networks, capital markets, economic growth at scale, and trust between strangers. It did this not by making merchants more ethical but by creating infrastructure that made certain errors and frauds detectably costly. The infrastructure succeeded because it aligned incentives toward accurate accounting regardless of individual virtue.

Double-entry contribution accounting can enable verified learning at scale, capability routing markets, information economy without extraction, and trust between AI systems and humans. It will do this not by making platforms more ethical but by creating infrastructure that makes unverified extraction detectably costly. The infrastructure will succeed because it aligns incentives toward verified contribution regardless of anyone’s moral commitments.

The Reciprocity Principle is not a policy proposal requiring government implementation, not an ethical framework requiring moral agreement, and not a technology platform requiring corporate adoption. It is an accounting specification for how to track both sides of contribution transactions, a verification protocol for how to prove capability actually increased, a routing mechanism for how value flows to verified contribution, and a double-entry ledger for making human capability machine-readable and automatically rewardable.

Implement it. Test it. Measure it. If attention debt shrinks, the accounting correction worked. If attention debt persists, the specification needs refinement. But frame it correctly: this was never a crisis requiring rescue. This was always an accounting error requiring correction. The debt exists because we routed value to the wrong variable for two decades. The technology exists. The protocols are specified. The choice is binary: correct the ledger, or compound the insolvency. No third option exists in accounting.

Further Reading and Related Infrastructure

Web4 implementation protocols:

AttentionDebt.org — Diagnostic infrastructure documenting how attention fragmentation destroyed cognitive substrate necessary for capability development, making synthesis-dependent completion inevitable and verification crisis structural rather than pedagogical.

ReciprocityPrinciple.org — Value routing mechanism enforcing double-entry bookkeeping for human contribution through automated verification of temporal persistence, cascade multiplication, and bidirectional exchange when platforms route to engagement signals rather than verified capability.

TempusProbatVeritatem.org — Foundational principle establishing temporal verification as necessity when momentary signals became synthesis-accessible.

MeaningLayer.org — Semantic infrastructure enabling AI access to 100% knowledge through meaning connections rather than 30% platform fragments.

PortableIdentity.global — Cryptographic identity ownership ensuring verification records remain individual property across all platforms.

PersistenceVerification.global — Temporal testing protocols proving capability persists independently across time.

CogitoErgoContribuo.org — Consciousness verification through contribution effects when behavioral observation fails.

CascadeProof.org — Verification methodology tracking capability cascades through mathematical branching analysis.

PersistoErgoDidici.org — Learning verification through temporal persistence when completion became separable from capability.

ContributionGraph.org — Cryptographically signed data structure recording verified contributions with temporal proofs, cascade effects, and bidirectional attestations, making human value history portable across all platforms independent of institutional intermediaries.

CausalRights.org — Constitutional framework establishing rights Web4 infrastructure must protect.

ContributionEconomy.global — Economic models where verified contributions replace jobs as basis for human participation.

Together these provide complete protocol infrastructure for civilization operating under post-synthesis conditions where observation provides zero information about underlying reality and only temporal patterns reveal truth.

This article represents a structural analysis of information system architecture. Implementation occurs at protocol level. No central authority required. No moral agreement necessary. Engineering problem. Engineering solution.

Rights and Usage

All materials published under AttentionDebt.org—including accounting error analysis, verification infrastructure frameworks, Ledger Inversion Theorem formulations, and contribution accounting methodologies—are released under Creative Commons Attribution-ShareAlike 4.0 International (CC BY-SA 4.0).

This license guarantees universal access and prevents private appropriation while enabling collective refinement through perpetual openness requirements.

Attention debt diagnostic infrastructure and verification protocols are public infrastructure accessible to all, controlled by none, surviving any institutional failure.

Source: AttentionDebt.org

Date: January 2026

Version: 1.0