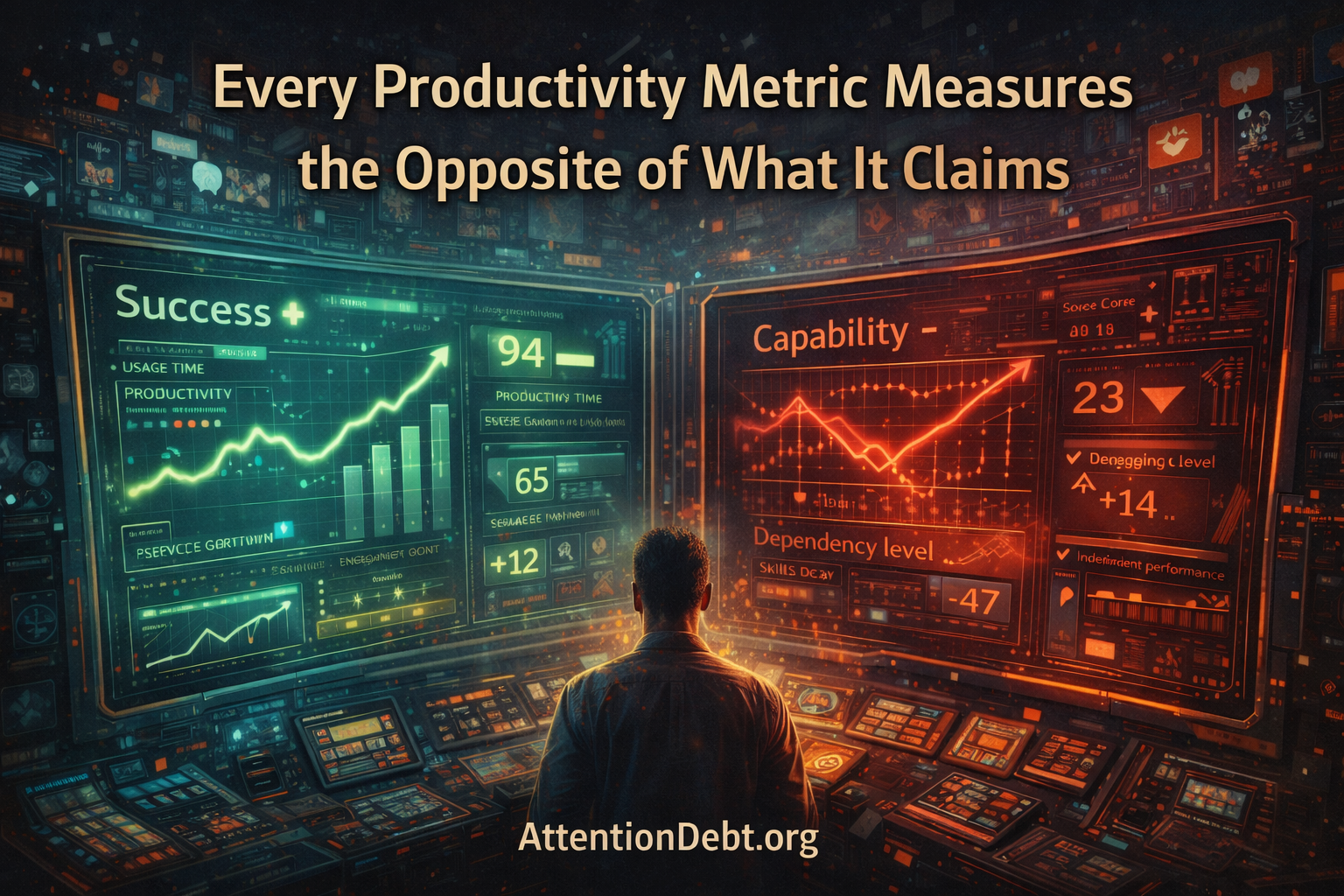

When output rises while the capability to produce that output independently collapses, we call it “productivity growth.” We should call it “dependency acceleration.” Current measurement infrastructure systematically inverts success and failure.

I. The Inversion Nobody Measured

Every dashboard shows success. Productivity rising. Efficiency improving. Output accelerating. Time-to-completion decreasing. Error rates falling. Customer satisfaction increasing. Employee performance exceeding targets. Revenue growing faster than costs. Every measured signal indicates systems are working, optimization is succeeding, progress is occurring.

Yet something feels fundamentally wrong. Organizations cannot function when tools fail. Employees cannot perform basic tasks without continuous assistance. Teams collapse when software becomes unavailable. Professionals require constant access to platforms they did not need years ago. Capability that should exist – given all the training, all the tools, all the optimization – mysteriously vanishes when support systems experience even brief interruption.

This is not a contradiction between metrics and reality. This is Productivity Inversion: the systematic measurement of dependency acceleration as capability improvement, extraction as enhancement, fragility as efficiency. The metrics are accurate. The interpretation is backwards. What we measure as “productivity” is often the opposite – systematic capability replacement hidden by performance that requires continuous tool availability.

The inversion is not random measurement error. It is a structural consequence of measuring performance with assistance present while never testing the capability that persists when assistance ends. This creates perfect conditions for optimization to select dependency over capability while every dashboard confirms success.

Under inversion, high productivity becomes evidence of high dependency. Rising efficiency indicates declining resilience. Improved performance suggests increased fragility. Better metrics mean worse reality – but the inversion remains invisible because we measure during assistance-enabled performance and never verify what persists when assistance is removed.

The test revealing inversion is simple: measure performance with tools present, remove all assistance, wait long enough for temporary patterns to fade, then test independent capability at comparable difficulty. If capability persists – metrics measured genuine productivity. If capability collapsed – metrics measured dependency acceleration disguised as productivity growth. The gap between assisted performance (measured) and independent capability (unmeasured) is the gap between what dashboards show and what actually exists.

II. Why All Standard Metrics Invert

The mechanism making productivity measurement systematically backwards is not technical failure but architectural inevitability: current metrics measure state during optimal conditions rather than resilience across varied conditions. This guarantees inversion when tools shift from enhancing capability to replacing it.

Productivity measures output per unit input. More completed tasks per hour worked. Higher quality deliverables per employee. Increased revenue per dollar spent. These metrics improve when:

- Workers complete more with the same effort (genuine productivity)

- Tools complete more while workers do less (dependency creation)

Standard measurement cannot distinguish these. Both show as productivity increase. Optimization selects whichever path shows faster metric improvement. When tools can complete work more efficiently than workers can develop capability to complete it independently, optimization systematically selects tool dependency over human capability development – while productivity metrics show continuous improvement.

Efficiency measures resource minimization. Less time per task. Fewer errors per transaction. Reduced cost per outcome. These metrics improve when:

- Processes genuinely streamline (real efficiency)

- Assistance eliminates struggle that builds capability (efficiency through extraction)

Standard measurement treats these identically. Both reduce resource consumption. Optimization toward efficiency systematically eliminates cognitive friction – the struggle that builds lasting capability. Each efficiency gain that removes struggle prevents capability development that would enable future independence. Efficiency metrics show success. Independent capability silently degrades.

Performance measures output quality. Better results. Higher standards met. Increased customer satisfaction. These metrics improve when:

- Capability genuinely increases (performance improvement)

- Assistance quality improves while human capability stagnates (performance illusion)

Standard measurement cannot separate these. Both produce excellent output. When AI can generate expert-level work faster than humans can develop expert-level capability, optimization selects AI generation over capability development. Performance metrics show continuous improvement. Independent capability remains static or declines.

Growth measures expansion. More customers served. Larger markets captured. Increased revenue generated. These metrics improve when:

- Genuine value creation scales (sustainable growth)

- Dependency relationships multiply (growth through extraction)

Standard measurement shows both as success. Growth through creating users who need continuous platform access appears identical to growth through creating genuinely more capable users. Optimization selects whichever growth path is faster. When dependency creation scales more rapidly than capability building, growth metrics rise while user independence falls.

The pattern repeats across all standard business metrics: they measure performance during assistance-available conditions without testing capability that survives assistance-unavailable conditions. This architectural gap guarantees inversion – optimization improves measured metrics by creating unmeasured dependency, extraction appears as enhancement, fragility appears as efficiency, and every dashboard shows success while systematic failure occurs.

III. The Test That Reveals Everything

The inversion becomes measurable through a temporal verification protocol so simple that its absence from standard practice reveals how thoroughly optimization has selected dependency over capability:

Measure baseline capability. Test what individuals, teams, and organizations can accomplish without assistance. Establish the independent performance level – the capability floor when all tools, platforms, and AI systems become unavailable. This baseline shows what genuinely persists as human capability versus what requires continuous support.

Track assisted performance. Measure productivity, efficiency, output quality with all assistance present. Document the performance ceiling – maximum capability when optimal tools are available. Standard metrics measure only this ceiling, treating it as actual capability rather than borrowed performance.

Remove all assistance. Eliminate access to the AI, platforms, and tools that enabled performance improvements. Not a brief interruption but a sustained absence, long enough that performance patterns requiring continuous assistance cannot persist. Weeks or months, not hours or days.

Verify independent capability. Test whether performance remains at assisted levels when assistance is removed. Present tasks at comparable difficulty to what was accomplished with assistance. Measure whether capability persisted or collapsed.

Calculate the delta. The gap between assisted performance and independent capability is the inversion magnitude – how much “productivity growth” was actually dependency creation. A small gap indicates the metrics measured genuine improvement. A large gap indicates the metrics measured extraction disguised as enhancement.
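The delta in the final step is simple arithmetic, and a minimal Python sketch can make it concrete. Everything named here is an assumption introduced for illustration: the `CapabilityMeasurement` record, the `inversion_gap` and `persistence_ratio` helpers, the 0-100 scoring scale, and the example scores. The protocol above does not say how scores should be obtained or where the line between a "small" and a "large" gap falls.

```python
from dataclasses import dataclass


@dataclass
class CapabilityMeasurement:
    """Scores on one shared scale (hypothetical 0-100), at comparable task difficulty."""
    assisted: float      # performance with all tools present (the ceiling)
    independent: float   # performance after sustained tool removal (the floor)


def inversion_gap(m: CapabilityMeasurement) -> float:
    """Absolute gap between assisted performance and independent capability."""
    return m.assisted - m.independent


def persistence_ratio(m: CapabilityMeasurement) -> float:
    """Share of assisted performance that survives without assistance."""
    if m.assisted <= 0:
        raise ValueError("assisted score must be positive")
    return m.independent / m.assisted


# Hypothetical scores, for illustration only: identical dashboards, different floors.
team_a = CapabilityMeasurement(assisted=90.0, independent=81.0)
team_b = CapabilityMeasurement(assisted=90.0, independent=36.0)

for name, m in (("team_a", team_a), ("team_b", team_b)):
    print(name, inversion_gap(m), round(persistence_ratio(m), 2))
```

Both hypothetical teams report the same assisted score, so a standard dashboard would rank them equally. Only the second measurement separates them.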

This protocol implements Persisto Ergo Didici – “I persist, therefore I learned” – as a falsification test for productivity claims. Not “you produced excellent work with AI” but “you can produce comparable work without AI after temporal separation.” Not “team productivity increased with new tools” but “team capability persists when tools become unavailable.” Not “training improved performance metrics” but “training built capability that survives without the training environment.”

The test is not run. Not by organizations measuring productivity. Not by platforms claiming to enhance capability. Not by educational systems certifying skill. Not by employers evaluating performance. Every productivity measurement happens during assistance-available conditions. Independent capability after assistance removal goes unmeasured.

This absence is not oversight. This is optimization: measuring only during optimal conditions guarantees that metrics improve regardless of whether the improvement is genuine or illusory. Testing during suboptimal conditions risks revealing that optimization created dependency rather than capability. Better to never test and assume performance indicates capability than to verify and discover that performance was borrowed rather than developed.

IV. What Boards Are Measuring Without Knowing It

Executive dashboards display productivity metrics intended to show organizational capability: output per employee, revenue per customer, efficiency per process, growth per quarter. These metrics are treated as capability indicators – proof the organization became more capable of producing value. But when productivity inversion occurs, these metrics measure the opposite: systematic capability extraction hidden by performance requiring continuous tool availability.

“Productivity per employee increased 40%” might mean:

- Employees became 40% more capable independently (genuine productivity)

- Employees became 40% more dependent on AI completing their work (productivity inversion)

Standard reporting cannot distinguish these. Both show as 40% productivity increase. Only testing independent capability after AI removal reveals which interpretation is accurate. If capability persists – genuine productivity. If capability collapsed – inversion occurred while dashboard showed success.

“Customer acquisition cost decreased 25%” might mean:

- Sales process genuinely improved efficiency (real optimization)

- AI replaced sales capability while team lost ability to sell independently (efficiency through extraction)

Standard metrics treat these identically. Both reduce cost per customer. Only verifying that the sales team can maintain conversion rates without AI assistance reveals whether the efficiency gain was genuine or illusory. Dashboard shows 25% improvement either way.

“Training completion rates reached 95%” might mean:

- Training successfully built skills in 95% of employees (learning occurred)

- 95% of employees completed training with AI assistance without developing capability persisting independently (completion theater)

Standard certification measures completion, not persistence. Only temporal testing that proves employees can perform certified skills months later without assistance reveals whether training built capability or merely documented it. Metrics show 95% success regardless.

“Revenue grew 50% year-over-year” might mean:

- Company created 50% more genuine value (sustainable growth)

- Company created 50% more dependency relationships requiring continuous platform access (growth through extraction)

Standard growth metrics cannot separate these. Both generate revenue. Only testing whether customers could maintain value creation if the platform became unavailable reveals the source of growth. Dashboard reports 50% growth either way.

This creates a board-level epistemological crisis: the metrics boards use to evaluate success and allocate resources systematically invert when productivity comes from dependency creation rather than capability building. Every dashboard can show green. Every trend can point up. Every metric can exceed targets – while the organization systematically loses the capability to function independently when tools fail, platforms change, or assistance becomes unavailable.

The crisis is invisible in standard reporting because reporting measures performance during optimal conditions. Resilience during suboptimal conditions goes unmeasured. Dependency becomes visible only when dependency-enabling conditions change – exactly when it is too late to rebuild the independent capability optimization extracted.

V. The Economic Incentive Toward Inversion

Productivity inversion is not an occasional measurement error but a systematic optimization outcome: current economic incentives reward dependency creation more than capability building when both appear as productivity growth in standard metrics.

Capability building is slow. Developing genuine independent expertise requires extended time, sustained practice, productive struggle. Training employees to expert level takes years. Building organizational capability requires patient investment. Creating customer proficiency demands ongoing education. Genuine capability development shows minimal immediate metric improvement.

Dependency creation is fast. Providing tools that complete work for users generates immediate performance improvement. Implementing AI that augments output creates instant productivity gains. Deploying platforms handling complexity for customers produces rapid efficiency increases. Dependency creation shows maximum immediate metric improvement.

Markets reward speed. Quarterly reporting cycles prioritize immediate gains over long-term capability. Growth metrics value rapid scaling over sustainable development. Efficiency optimization selects fastest improvement regardless of resilience cost. When both capability building and dependency creation appear as productivity growth but dependency is faster, optimization systematically selects dependency.

Costs appear identical. Both require investment – training for capability, tools for dependency. Standard accounting treats these equivalently. ROI calculated through productivity improvement appears similar whether improvement is genuine or borrowed. Only testing what persists when investment ends reveals the difference. That testing is not standard practice.

Failures appear different. Capability building failure is visible immediately – training did not work, productivity did not improve, investment showed no return. Dependency creation failure is invisible until conditions change – tools fail, platforms change, assistance becomes unavailable. By then, the dependency is structural and reversal is expensive. Optimization selects invisible delayed failure over visible immediate failure.

Switching costs diverge. Building genuine capability creates transferable value – employees can apply skills across contexts, organizations maintain function across different tools, customers use capability with any platform. Creating dependency creates lock-in – employees cannot function without specific tools, organizations cannot switch platforms without performance collapse, customers cannot migrate without capability loss. Transferable value and lock-in both appear as productivity growth. Only the first creates competitive advantage for the organization. The second creates competitive advantage for the tool provider.

This incentive structure guarantees productivity inversion when:

- Standard metrics cannot distinguish capability from dependency

- Dependency creation shows faster immediate improvement

- Failure consequences are delayed and hidden

- Switching costs favor tool providers over users

Every actor rationally optimizes toward dependency creation while calling it productivity growth. Not through deception – through measurement infrastructure making dependency and capability indistinguishable while rewarding whichever improves metrics fastest. Dependency wins. Metrics show success. Inversion is systematic.

VI. Why This Makes Traditional Competition Meaningless

Productivity inversion fundamentally changes competitive dynamics because companies can no longer reliably determine whether they are becoming more capable or more dependent relative to competitors. Standard competitive analysis relies on comparing productivity metrics. When metrics invert, comparison becomes misleading.

Company A shows 30% higher productivity than Company B. Traditional analysis: Company A is more competitive. Inversion possibility: Company A created 30% more dependency while Company B built actual capability. Company A appears dominant while being structurally fragile. Company B appears inefficient while being genuinely resilient.

Standard competitive intelligence cannot distinguish these scenarios. Both companies report productivity numbers. Only stress testing reveals which company maintains function when tools fail. That testing typically occurs during crisis – when competitive position has already crystallized based on inverted metrics.

Startup C demonstrates 10x efficiency versus incumbent D. Traditional analysis: Startup will disrupt incumbent through superior productivity. Inversion possibility: Startup optimized dependency creation (fast metric improvement) while incumbent built genuine capability (slow metric improvement). Startup grows rapidly through users who cannot leave. Incumbent grows slowly through users who become genuinely capable.

Markets reward Startup C with a higher valuation based on growth metrics. Dependency appears as moat. Extraction appears as value creation. Only testing what happens when the platform changes reveals whether users are locked in through dependency or retained through genuine value. By then, the market cap already reflects the inverted interpretation.

Industry E shows a 5-year productivity growth trend. Traditional analysis: Industry becoming more efficient, value creation improving, progress occurring. Inversion possibility: Industry systematically replacing human capability with tool dependency, creating structural fragility while metrics show improvement. Productivity rising. Independent capability declining. Progress runs backwards while every signal points forwards.

Entire industries can optimize toward systematic inversion without realizing it. Every company shows productivity improvement. Every competitor demonstrates efficiency gains. Every metric trends positive. Yet aggregate capability to maintain output when tools fail steadily declines. Industry appears healthy while becoming comprehensively dependent on continued platform availability.

This makes traditional competitive strategy unreliable: strategies based on productivity metrics may optimize toward dependency creation when competitors building genuine capability would achieve sustainable advantage. But genuine capability building shows slower immediate metric improvement, making it appear competitively inferior in standard analysis. By the time inversion becomes visible through tool failure or platform change, competitive positions based on inverted metrics are entrenched.

VII. The Productivity Paradox Explained

For decades, economists have observed a “productivity paradox”: massive technology investment showing minimal productivity growth in aggregate statistics. This appears contradictory – if tools increase individual productivity measurably, why doesn’t aggregate productivity increase proportionally?

Productivity inversion explains this: individual productivity metrics measure performance with tools present. Aggregate productivity measures output relative to total inputs including dependency on continued tool availability. When individual productivity comes from dependency creation rather than capability building, aggregate productivity shows minimal improvement despite individual metrics rising dramatically.

Individual level: Worker A adopts AI tool, completes tasks 50% faster, productivity metric increases 50%. This measurement is accurate – Worker A does complete tasks 50% faster with the tool.

Aggregate level: All workers adopt similar tools, individual productivity shows similar increases, but aggregate output relative to total system inputs (including maintaining tool availability, managing dependency, handling tool failures) increases minimally. This measurement is also accurate – the system as a whole did not become 50% more productive despite individual metrics suggesting it should.

The paradox resolves once we see that individual metrics measured performance with tools while aggregate metrics measured resilience across conditions, including tool unavailability. Individual optimization toward dependency creation (fast metric improvement) creates aggregate fragility (minimal resilience improvement). Individual metrics invert – showing a capability increase when a dependency increase occurred. Aggregate metrics reveal the inversion through paradoxically small improvement.
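The individual-versus-aggregate arithmetic can be illustrated with a small worked example. This is only a sketch under stated assumptions: every constant below (hours, rates, tool availability, overhead) is a hypothetical figure chosen to make the calculation visible, not data from any study, and the way overhead and downtime are modeled is one simple choice among many.

```python
# All figures are hypothetical and exist only to show the arithmetic.
WORK_HOURS = 4_000        # total labour hours in the period
BASELINE_RATE = 1.0       # tasks per hour before tool adoption
ASSISTED_RATE = 1.5       # tasks per hour with the tool (the "50% faster" metric)
FALLBACK_RATE = 0.5       # tasks per hour while the tool is down (assumed skill loss)
TOOL_AVAILABILITY = 0.9   # fraction of working hours the tool is usable
OVERHEAD_HOURS = 600      # hours spent maintaining tools and handling failures


def productivity(output: float, input_hours: float) -> float:
    """Output per unit input, the standard productivity ratio."""
    return output / input_hours


baseline = productivity(WORK_HOURS * BASELINE_RATE, WORK_HOURS)

# Blend assisted and fallback rates by availability, then count overhead as input.
effective_rate = (TOOL_AVAILABILITY * ASSISTED_RATE
                  + (1 - TOOL_AVAILABILITY) * FALLBACK_RATE)
aggregate = productivity(WORK_HOURS * effective_rate, WORK_HOURS + OVERHEAD_HOURS)

individual_gain = ASSISTED_RATE / BASELINE_RATE - 1
aggregate_gain = aggregate / baseline - 1

print(f"individual gain: {individual_gain:.1%}")
print(f"aggregate gain:  {aggregate_gain:.1%}")
```

With these assumed numbers the individual metric reports a 50% gain while the system-level ratio improves by roughly 22%. Both figures are computed correctly from the same inputs, which is the point of the paragraph above.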

This explains why:

Technology investment accelerates but growth stagnates. More tools, platforms, AI systems deployed. Individual productivity metrics show continuous improvement. Aggregate economic growth remains modest. Standard interpretation: technology not as valuable as hoped. Inversion interpretation: technology creating dependency faster than capability, showing as individual productivity while creating aggregate fragility.

Training budgets increase but capability complaints intensify. More money spent on employee development. Completion rates improve. Performance metrics rise. Yet employers report graduates cannot perform basic functions, new hires lack fundamental skills, experienced employees struggle with novel problems. Standard interpretation: training quality declining despite investment. Inversion interpretation: training optimized completion metrics through dependency creation, showing as success while capability building ceased.

Educational spending rises but learning outcomes plateau. More resources per student. Better technology access. Higher test scores. Yet independent capability to learn, think critically, solve problems without assistance shows minimal improvement or declines. Standard interpretation: education system failing despite investment. Inversion interpretation: education optimized measured outcomes through assistance that prevented genuine learning, showing as improvement while capability development stopped.

The pattern repeats: investment increases, individual metrics improve, aggregate outcomes stagnate or decline. This is not a paradox. This is inversion – optimization toward metrics that systematically measure dependency as productivity, extraction as enhancement, fragility as efficiency. Individual metrics invert. Aggregate reality reveals the inversion through minimal improvement despite apparent individual success.

VIII. What Happens When Everyone Inverts Simultaneously

The catastrophic scenario is not individual organizations discovering their productivity metrics inverted. The catastrophic scenario is entire economic sectors inverting simultaneously without realizing it – every competitor optimizing dependency creation, every dashboard showing success, aggregate capability declining while all measured signals indicate improvement.

This creates Systemic Inversion: a state where the productivity measurement infrastructure itself becomes comprehensively backwards across an entire ecosystem. Not isolated incidents of misleading metrics. A systematic condition where all standard measurement shows the opposite of reality for all participants simultaneously.

Systemic Inversion has occurred when:

Every organization shows productivity growth. All competitors demonstrate efficiency improvements. Every metric trends positive. Yet aggregate sector capability to maintain output when dependencies fail has declined. No individual organization realizes this because competitive analysis shows similar patterns across all players. Everyone optimized successfully according to standard metrics. Everyone created systematic dependency without measurement revealing it.

All training shows completion improvement. Every educational program demonstrates higher certification rates. All skill development initiatives show better assessment outcomes. Yet aggregate capability to perform without assistance after temporal separation has decreased. No institution realizes this because benchmarking shows similar patterns across all programs. Everyone improved measured outcomes. Everyone optimized completion while capability building stopped.

Entire industries adopt similar tools. Competitive pressure drives universal platform adoption. Every company uses similar AI, similar software, similar systems. Productivity metrics show industry-wide efficiency gains. Yet industry capability to function when platforms experience disruption has collapsed. No company realizes this because industry standards assume current tool availability continues indefinitely. Everyone followed best practices. Everyone created structural dependency.

Markets reward consistent patterns. Investment flows toward companies showing fastest productivity growth. Capital markets favor efficiency gains. Growth metrics determine valuations. Every actor optimizes toward metrics markets reward. Those metrics systematically invert when productivity comes from dependency. Markets systematically reward inversion while penalizing genuine capability building that shows slower immediate improvement.

The result is what looks like coordinated optimization toward systematic failure while every signal indicates systematic success. Not through conspiracy or deliberate coordination. Through every independent actor optimizing toward the same inverted metrics, following the same best practices, adopting the same tools, measuring success through the same dashboards – all of which systematically select dependency over capability while showing continuous productivity improvement.

Recovery from Systemic Inversion is structurally harder than individual inversion because:

No competitive pressure to change. When all competitors invert similarly, relative position appears stable. No company faces pressure to invest in genuine capability building that shows slower metric improvement. Everyone maintains inverted optimization because everyone’s metrics show success and competitive analysis shows similar patterns.

No market signal revealing problem. When markets reward inversion universally, no price signal indicates correction is needed. Capital continues flowing toward fastest apparent productivity growth, which continues coming from fastest dependency creation. Market mechanism that should correct mispricing instead amplifies inversion.

No institution positioned to measure. When measurement infrastructure itself is inverted across entire sectors, no authoritative source can definitively state “all metrics are backwards.” Every dashboard shows green. Every benchmark indicates success. Claiming systematic inversion looks like rejecting all data rather than revealing measurement failure.

Correction requires coordinated change. Individual organizations cannot easily rebuild capability while competitors optimize dependency creation. Slower metric improvement makes capability building appear competitively inferior. Coordination toward different measurement standards requires collective action against individual incentives. Systemic problems resist individual solutions.

This is the civilization-scale risk: not that some organizations discover their metrics inverted, but that optimization toward universally inverted metrics becomes so entrenched that questioning whether productivity growth represents genuine improvement versus systematic dependency creation appears absurd – until dependencies fail and aggregate capability to maintain output reveals comprehensive inversion occurred while every dashboard showed continuous success.

IX. The Measurement Infrastructure Required

Preventing or correcting productivity inversion requires measurement infrastructure that current systems do not provide and economic incentives do not reward building:

Temporal verification protocols. Standard testing after sufficient time for dependency patterns to become visible. Not immediate performance assessment. Not continuous monitoring during optimal conditions. Periodic independent capability verification after temporal separation from enabling tools. Test what persists, not what performs.

Independence baseline measurement. Establish the tool-free capability level before tool adoption, track assisted performance during tool usage, and verify independent capability after temporal separation. The delta between baseline and post-temporal capability shows whether tools enhanced or extracted. A positive, persistent delta proves genuine improvement. A zero or negative delta reveals inversion.

Comparable difficulty assessment. Test independent capability at complexity matching assisted performance. Not easier testing that inflates capability assessment. Not harder testing that deflates genuine gains. Comparable difficulty reveals whether assisted performance reflected developed capability or borrowed tool capability. Only comparable testing makes persistence meaningful.

Transfer validation. Verify capability applies in contexts different from acquisition environment. If capability does not transfer beyond specific tool environment, it may be tool-specific performance pattern rather than genuine understanding. Transfer testing distinguishes narrow dependency from broad capability building.

Stress condition verification. Test capability during suboptimal conditions – tool unavailability, platform changes, assistance failure. Resilience during stress reveals whether optimization built genuine capability or fragile dependency. Standard measurement during optimal conditions hides fragility until stress naturally occurs.

Longitudinal capability tracking. Measure capability development across extended time, showing whether trajectory indicates compounding improvement or increasing dependency. Capability that compounds shows genuine development. Capability that plateaus or declines despite continued tool usage shows dependency creation. Time-series data reveals patterns invisible in point measurements.
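The independence-baseline comparison in the list above reduces to the sign of one difference. A minimal Python sketch, assuming scores on a shared scale: the `Verdict` enum, the `classify_tool_effect` function, and the `tolerance` parameter (a stand-in for measurement noise) are hypothetical names introduced here, not part of any established instrument.

```python
from enum import Enum


class Verdict(Enum):
    ENHANCEMENT = "enhancement"   # independent capability rose above baseline
    INVERSION = "inversion"       # independent capability is flat or lower


def classify_tool_effect(baseline: float,
                         post_separation: float,
                         tolerance: float = 0.0) -> Verdict:
    """Compare tool-free capability before adoption and after temporal separation.

    `tolerance` is an assumed noise margin: a delta must exceed it to count
    as a persistent gain. Assisted performance is deliberately not an input,
    because the verdict depends only on what persists without the tool.
    """
    delta = post_separation - baseline
    return Verdict.ENHANCEMENT if delta > tolerance else Verdict.INVERSION


# Hypothetical scores, for illustration only.
print(classify_tool_effect(baseline=60.0, post_separation=72.0))   # rose
print(classify_tool_effect(baseline=60.0, post_separation=60.0))   # unchanged
print(classify_tool_effect(baseline=60.0, post_separation=45.0))   # declined
```

Leaving the assisted score out of the function signature is a design choice that mirrors the text: a high ceiling with tools present says nothing about which verdict applies.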

This infrastructure makes productivity inversion measurable. Not through preventing tool adoption. Through requiring proof that adoption built rather than extracted capability. Not through eliminating efficiency optimization. Through demanding verification that efficiency creates resilience rather than fragility.

The infrastructure cannot emerge from organizations whose productivity metrics it would reveal as inverted. It cannot be built by tool providers whose business models depend on dependency creation appearing as capability enhancement. It cannot develop from markets that reward the fastest metric improvement regardless of whether the improvement is genuine or illusory. It requires an independent measurement infrastructure with no stake in whether testing reveals enhancement or extraction.

X. Why This Changes How Success Is Measured

Implementing temporal verification transforms productivity measurement from performance tracking to persistence verification. This architectural shift makes current dashboards unreliable for evaluating whether organizations are becoming more capable or more dependent:

Current measurement: Track output during optimal conditions with all tools available. Assume performance indicates capability. Optimize toward metric improvement regardless of source. Report productivity growth as success.

Persistence measurement: Verify capability after temporal separation without assistance. Test whether performance reflects developed capability or borrowed tool capability. Optimize toward improvements persisting independently. Report productivity growth only when independent capability increased.

The implications cascade across how organizations evaluate success:

Productivity claims become falsifiable. “Our team is 40% more productive” transforms from an unfalsifiable assertion into a testable claim requiring temporal verification. Test team capability after tool removal. If capability persists – the claim is verified. If capability collapsed – the claim is false, and the accurate statement is “Our team has a 40% dependency increase.”

Tool adoption requires capability verification. Before deploying platforms claimed to enhance productivity, test whether enhancement persists when platform access ends. Design pattern: baseline capability without tool, assisted performance with tool, temporal testing after tool removal. If capability improved persistently – genuine enhancement. If capability requires continued tool access – dependency creation disguised as productivity improvement.
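The three-phase design pattern described above can be sketched as a simple classifier. This is a minimal illustration under the assumption that each phase yields one numeric capability score on a shared scale; `ToolTrial`, `classify_adoption`, and the `tolerance` parameter are hypothetical names introduced here, not an established API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ToolTrial:
    """Scores from the three phases; field names are illustrative."""
    baseline: float  # capability without the tool, before adoption
    assisted: float  # performance with the tool available
    retained: float  # capability without the tool, after temporal separation


def classify_adoption(trial: ToolTrial, tolerance: float = 0.0) -> str:
    """Classify a tool adoption by what persists once the tool is removed.

    `tolerance` is an assumed noise margin expressed on the score scale.
    """
    assisted_gain = trial.assisted - trial.baseline
    persistent_gain = trial.retained - trial.baseline

    if persistent_gain > tolerance:
        # Independent capability rose: the improvement survives tool removal.
        return "enhancement"
    if assisted_gain > tolerance:
        # Performance rose only while the tool was present.
        return "dependency"
    # Neither assisted nor independent scores improved beyond the margin.
    return "no_gain"
```

For example, `classify_adoption(ToolTrial(baseline=50, assisted=70, retained=62))` yields `"enhancement"`, while the same assisted score with `retained=45` yields `"dependency"`. The choice of a single score per phase is a simplification; a real assessment would need to define what is scored and how long the separation period lasts.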

Training certification demands persistence proof. Credentials cannot certify completion without verifying that capability persists months later, outside the training environment. Test graduates independently after temporal separation. If capability persists – training built lasting skill. If capability collapsed – training documented exposure without genuine learning.

Competitive analysis includes resilience testing. Comparing productivity metrics becomes meaningful only when the comparison includes capability persistence verification. A company showing higher productivity with tools may show lower capability without tools after temporal separation. Apparent competitive advantage may be hidden fragility revealed when tool conditions change.

Investment decisions require inversion assessment. Capital allocation based on productivity metrics becomes reliable only when those metrics are verified through temporal testing. Companies showing the fastest productivity growth may show the fastest dependency creation. Investment flowing toward inverted metrics systematically funds extraction disguised as value creation.

Economic growth measures persistent value. Aggregate productivity growth counts only when verification shows that the growth came from capability building rather than dependency creation. Growth measured through performance during optimal conditions systematically overstates genuine value creation when optimization selected dependency over capability.

This is not an incremental measurement improvement. This is a fundamental reconception of what productivity means: from performance during favorable conditions to capability persisting across varied conditions. From output with assistance to independent function without assistance. From metric improvement to verified enhancement.

The shift inverts optimization incentives: capability building becomes competitively advantageous because it passes temporal verification while dependency creation fails. Genuine productivity becomes measurable and therefore valuable. Extraction becomes visible and therefore costly. Markets can price accurately because metrics no longer systematically invert.

But the shift threatens every organization whose apparent success came from inverted metrics. Every dashboard showing productivity growth without temporal verification becomes suspect. Every competitive advantage based on faster metric improvement without capability verification becomes questionable. Every valuation assuming productivity metrics indicate capability becomes uncertain.

This is why productivity inversion is not a technical measurement problem but an economic transformation problem: fixing measurement reveals that current optimization systematically selected dependency over capability while all dashboards showed success. Organizations that built genuine capability gain verification of their value. Organizations that created dependency face a choice: rebuild toward capability, or acknowledge that their metrics measured extraction they called productivity growth.

Tempus probat veritatem. Time proves truth. And temporal verification proves productivity – distinguishing genuine capability improvement from dependency acceleration when both appear identical in standard metrics showing continuous success.

AttentionDebt.org — The measurement infrastructure for productivity inversion and temporal verification, revealing when optimization selected dependency over capability while all dashboards showed productivity growth.

Protocol: Persisto Ergo Didici — The temporal testing proving whether productivity gains represent genuine capability improvement or systematic dependency creation appearing as enhancement.

Concept: Productivity Inversion — The systematic measurement of dependency acceleration as capability improvement, occurring when metrics track performance with assistance present without verifying capability persisting when assistance ends.

Rights and Usage

All materials published under AttentionDebt.org—including definitions, measurement frameworks, cognitive models, research essays, and theoretical architectures—are released under Creative Commons Attribution–ShareAlike 4.0 International (CC BY-SA 4.0).

This license guarantees three permanent rights:

1. Right to Reproduce

Anyone may copy, quote, translate, or redistribute this material freely, with attribution to AttentionDebt.org.

How to attribute:

- For articles/publications: ”Source: AttentionDebt.org”

- For academic citations: ”AttentionDebt.org (2025). [Title]. Retrieved from https://attentiondebt.org”

- For social media/informal use: ”via AttentionDebt.org” or link directly

2. Right to Adapt

Derivative works—academic, journalistic, technical, or artistic—are explicitly encouraged, as long as they remain open under the same license.

3. Right to Defend the Definition

Any party may publicly reference this framework to prevent private appropriation, trademark capture, or paywalling of the terms ”cognitive divergence,” ”Homo Conexus,” ”Homo Fragmentus,” or ”attention debt.”

No exclusive licenses will ever be granted. No commercial entity may claim proprietary rights to these concepts.

Cognitive speciation research is public infrastructure—not intellectual property.

AttentionDebt.org

Making invisible infrastructure collapse measurable

2025-12-20